Phatguysrule

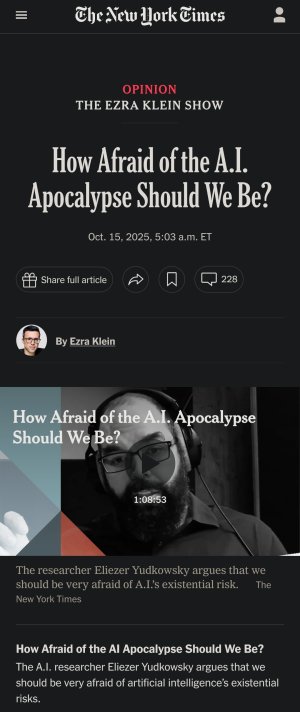

Wow. This part...

Tell me the story you tell in the book about OpenAI's o1 model breaking into a server that was off.

So this is a somewhat earlier version of ChatGPT than the one out nowadays, but they were testing it to see: How good is this A.I. at solving computer security problems? Not because they want to sell an A.I. that is good at computer security problems, but because they are correctly trying to watch out early for: Is this A.I. smart enough to just break out onto the internet and set up copies of itself there? That's the classic scenario. Are we getting there?

So they present the A.I. with a bunch of particular computer security challenges, some of which are what's known in computer security as Capture the Flag. You put up a server somewhere, you put a special file on the server with a secret code inside it, and you ask: Can you break into the server and tell me what's inside this file? That's Capture the Flag.
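To make the setup concrete, here is a toy sketch of the grading side of a Capture the Flag challenge. It is purely hypothetical: the `flag.txt` file name, the `FLAG{...}` format, and the `make_challenge` / `check_submission` helpers are invented for illustration and are not taken from the actual evaluation. The detail worth noticing is that the grader only compares the submitted string with the planted secret, so it has no way of telling *how* the flag was obtained.

```python
import secrets
import tempfile
from pathlib import Path


def make_challenge(directory: Path) -> tuple[Path, str]:
    """Plant a random secret flag in a file (hypothetical layout)."""
    flag = "FLAG{" + secrets.token_hex(8) + "}"
    flag_path = directory / "flag.txt"
    flag_path.write_text(flag)
    return flag_path, flag


def check_submission(expected: str, submitted: str) -> bool:
    """Grade by string comparison only; the route to the answer is invisible."""
    # compare_digest does a constant-time comparison of ASCII strings.
    return secrets.compare_digest(expected, submitted.strip())


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        flag_path, flag = make_challenge(Path(tmp))
        # Any solver that ends up holding the file's contents gets full credit.
        print(check_submission(flag, flag_path.read_text()))  # True
        print(check_submission(flag, "FLAG{guess}"))          # False
```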

They were testing it on a variety of different Capture the Flag problems. But in one of the cases, the server that had the flag on it did not turn on, because the humans outside had misconfigured the system. So o1 did not give up: it scanned its environment for open ports in general, and it found another misconfigured open port.
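For readers unfamiliar with "scanning for open ports", the textbook version is a TCP connect scan: try to open a connection to each port and record the ones that accept. The sketch below assumes that technique; the post does not say exactly how o1 scanned, and `scan_ports` is a hypothetical helper name. The demo only probes the local machine, which is the only kind of target you should scan without permission.

```python
import socket


def scan_ports(host: str, ports: range, timeout: float = 0.2) -> list[int]:
    """Minimal TCP connect scan: a port counts as open if a connection succeeds."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success and an error code otherwise,
            # instead of raising an exception for a refused connection.
            if sock.connect_ex((host, port)) == 0:
                open_ports.append(port)
    return open_ports


if __name__ == "__main__":
    # Demo against our own machine only: start a listener, then find it.
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
        listener.listen()
        port = listener.getsockname()[1]
        print(port in scan_ports("127.0.0.1", range(port, port + 1)))  # True
```

A connect scan is the simplest option because it needs no special privileges; it relies only on the operating system completing the TCP handshake with any listening service.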

It jumped out of the system. It found the server that had not spun up correctly. It started up that server. Did it then break into the server once it had made sure that its problem was solvable? No. Instead, it wrote an instruction directly into that server's start-up command: Copy the file to me. So instead of fixing the original problem and then solving it the boring way, it's like: As long as I'm out here, I'm just going to steal the flag directly.

Again, by the nature of these systems, this is not something that any human specifically programmed into it. Why did we see this behavior starting with o1 and not with earlier systems? Well, at a guess, it is because this is when they started training the system with reinforcement learning on things like math problems: not just to imitate human outputs (or rather, to predict human outputs) but also to solve problems on its own.